Distributed Systems

Distributed Systems all materials TU BCA

Unit 1: Introduction

Distributed System: Comprehensive Note

1. Introduction (4 Hours)

A distributed system is a collection of independent computers that appears to its users as a single coherent system. These systems aim to share resources, enhance performance, and tolerate faults, which makes them well suited to modern applications such as cloud computing, online services, and global communication platforms.

1.1 Characteristics of Distributed Systems

Distributed systems have unique characteristics that differentiate them from standalone systems:

-

Resource Sharing

- Enables multiple users to share hardware, software, and data resources.

- Examples include file servers, printers, and shared databases.

-

Concurrency

- Multiple processes can run simultaneously, improving efficiency and utilization.

-

Scalability

- The system can handle increased workload by adding more resources, such as servers or storage.

-

Fault Tolerance

- The system can recover from component failures without significant service interruption.

-

Transparency

Distributed systems aim to hide complexity from users. There are several types of transparency:

- Access Transparency: Users access resources uniformly regardless of location.

- Location Transparency: Users are unaware of where the resource is physically located.

- Replication Transparency: Users don’t need to know if a resource is replicated.

- Concurrency Transparency: Users do not notice that others are using the same resource.

- Failure Transparency: The system handles failures gracefully.

-

Openness

- The system can be extended or modified with minimal effort, often through standard protocols.

-

Heterogeneity

- Components may differ in hardware, operating systems, or programming languages.

1.2 Design Goals

The goals of a distributed system design are fundamental to its operation and success:

-

Transparency

- Simplifies user interaction by abstracting underlying complexity.

-

Scalability

- Supports growth in users, resources, or processes without significant performance degradation.

-

Fault Tolerance

- Provides reliability by detecting, isolating, and recovering from failures.

-

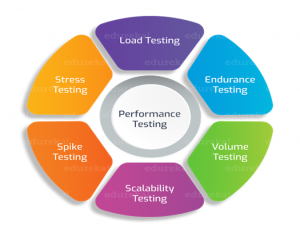

Performance

- Ensures responsiveness and low latency through optimized communication and computation.

-

Security

- Protects against unauthorized access and ensures data confidentiality and integrity.

-

Resource Management

- Allocates resources efficiently, avoiding bottlenecks.

-

Flexibility

- Adapts to changing workloads, configurations, and demands.

1.3 Types of Distributed Systems

Distributed systems are categorized based on their purpose and architecture:

-

Distributed Computing Systems

- Focus on computational tasks and parallel processing.

- Examples: Cluster computing, grid computing.

-

Distributed Information Systems

- Handle large-scale data storage and retrieval.

- Examples: Online transaction processing (OLTP), database management systems.

-

Distributed Pervasive Systems

- Integrate distributed systems into everyday life with IoT devices.

- Examples: Smart homes, wearable devices.

-

Real-Time Distributed Systems

- Operate under time constraints for applications like military and robotics.

- Examples: Air traffic control systems.

-

Peer-to-Peer Systems

- Decentralized systems where all nodes are equal, sharing resources directly.

- Examples: BitTorrent, blockchain.

1.4 Case Study: The World Wide Web

The World Wide Web (WWW) is one of the most widely used distributed systems. It exemplifies many principles of distributed systems in its operation and design.

-

Overview

- The WWW is a distributed information system that allows access to multimedia content via a browser using the HTTP/HTTPS protocol. It is built on top of the Internet.

-

Key Characteristics

- Resource Sharing: Hosts and clients interact to share web pages, multimedia, and applications.

- Scalability: The WWW supports billions of users simultaneously.

- Fault Tolerance: Built-in redundancy in web hosting ensures availability.

- Transparency: Users access resources without knowing their physical location.

-

Components

- Web Browsers: User interface for accessing the web (e.g., Chrome, Firefox).

- Web Servers: Host web pages and provide content to browsers (e.g., Apache, NGINX).

- Search Engines: Facilitate finding information on the web (e.g., Google, Bing).

-

Design Challenges

- Latency: The time delay in delivering web content due to network congestion or server overload.

- Security: Protecting user data and preventing cyber-attacks like phishing and DDoS.

- Data Consistency: Ensuring users receive up-to-date information.

-

Applications

- E-commerce: Amazon, eBay.

- Social Media: Facebook, Instagram.

- Education: Online learning platforms like Coursera.

Conclusion

Distributed systems play a crucial role in modern computing, enabling seamless resource sharing, scalability, and reliability. Understanding their characteristics, goals, and types provides a strong foundation for designing and analyzing these complex systems. The World Wide Web serves as a prime example of a distributed system, highlighting the practical applications and challenges faced in real-world scenarios.

Unit 2: Architecture

Distributed Systems: Architecture (4 Hours)

2. Architecture

The architecture of a distributed system defines how its components interact, communicate, and coordinate to achieve the system’s goals. It provides a blueprint for system design, enabling scalability, reliability, and flexibility.

2.1 Architectural Styles

Architectural styles represent high-level designs for organizing components within a distributed system. Common styles include:

-

Layered Architecture

- Components are organized into layers, where each layer provides services to the layer above and consumes services from the layer below.

- Example: OSI model, where layers like application, transport, and network define the communication stack.

-

Client-Server Architecture

- The system is divided into clients (requesting services) and servers (providing services).

- Advantages: Simplifies resource management and centralizes control.

- Example: Web applications where browsers (clients) interact with web servers.

-

Peer-to-Peer (P2P) Architecture

- All nodes in the system have equal roles, acting as both clients and servers.

- Advantages: Decentralization, scalability, and fault tolerance.

- Example: File-sharing systems like BitTorrent.

-

Service-Oriented Architecture (SOA)

- Composed of loosely coupled services that communicate through standard protocols like SOAP or REST.

- Advantages: Reusability and interoperability.

- Example: Microservices architecture in cloud applications.

-

Event-Driven Architecture

- Components interact by exchanging events, allowing asynchronous communication.

- Advantages: High responsiveness and decoupling of components.

- Example: IoT systems where sensors send data to processing nodes.

-

Microservices Architecture

- The application is decomposed into small, independent services that can be deployed and scaled independently.

- Advantages: Flexibility and fault isolation.

- Example: Netflix’s streaming platform.

-

Hybrid Architecture

- Combines elements of multiple architectural styles to leverage their strengths.

- Example: Modern cloud systems that use microservices with a layered backend.

2.2 Middleware Organization

Middleware acts as a bridge between applications and underlying networks, providing abstractions and hiding the complexities of distributed system operations.

-

Role of Middleware

- Communication: Provides standardized APIs and protocols for inter-process communication.

- Resource Management: Manages resource allocation and synchronization.

- Transparency: Ensures location, access, and replication transparency.

- Scalability: Enables scaling by optimizing communication and resource sharing.

-

Types of Middleware

- Message-Oriented Middleware (MOM): Facilitates communication using message queues.

- Example: RabbitMQ, Apache Kafka.

- Remote Procedure Call (RPC): Enables invoking functions on remote machines as if they were local.

- Example: gRPC.

- Database Middleware: Provides a unified interface for accessing distributed databases.

- Example: ODBC, JDBC.

- Object Middleware: Manages interactions between distributed objects.

- Example: CORBA, Java RMI.

- Service Middleware: Supports service discovery, orchestration, and communication.

- Example: Docker Swarm, Kubernetes.

-

Middleware Challenges

- Ensuring security, scalability, fault tolerance, and efficient resource utilization.

2.3 System Architecture

System architecture in distributed systems defines the structural arrangement of components, their interactions, and their roles.

-

Centralized Architecture

- A single server handles all requests, while clients interact with the server.

- Advantages: Simplifies control and coordination.

- Drawbacks: Limited scalability and single point of failure.

- Example: Traditional client-server systems.

-

Decentralized Architecture

- Responsibilities are distributed among multiple nodes, eliminating single points of failure.

- Advantages: High scalability and fault tolerance.

- Example: Peer-to-peer networks.

-

Hybrid Architecture

- Combines centralized and decentralized approaches.

- Advantages: Balances control and scalability.

- Example: Cloud computing systems using centralized management with decentralized execution.

-

Multi-Tier Architecture

- Components are divided into tiers, each responsible for specific tasks.

- Presentation Tier: User interface.

- Logic Tier: Application processing.

- Data Tier: Database management.

- Advantages: Simplifies development and maintenance.

- Example: E-commerce websites.

-

Shared-Nothing Architecture

- Each node operates independently without sharing resources like memory or disk.

- Advantages: High scalability and fault isolation.

- Example: Distributed databases like Apache Cassandra.

-

Replication-Based Architecture

- Data or services are replicated across multiple nodes to enhance reliability and performance.

- Advantages: Reduces latency and ensures availability.

- Example: Content Delivery Networks (CDNs).

2.4 Example Architectures

-

Google File System (GFS)

- A distributed file system designed for large-scale data processing.

- Features: Fault tolerance, scalability, and optimized for large files.

- Components: Master node, chunk servers, and clients.

-

Hadoop Distributed File System (HDFS)

- Part of the Hadoop ecosystem, HDFS provides scalable and reliable storage for big data.

- Features: Data replication, high fault tolerance, and compatibility with MapReduce.

-

Amazon Web Services (AWS)

- A cloud computing platform offering services like EC2, S3, and Lambda.

- Architecture: Built on a hybrid model, leveraging microservices and replication.

-

Blockchain Architecture

- Decentralized ledger technology where transactions are validated by consensus.

- Features: Immutability, transparency, and security.

- Example: Bitcoin, Ethereum.

-

Apache Kafka

- A distributed event-streaming platform.

- Features: High-throughput message handling, fault tolerance, and scalability.

-

IoT Systems

- Example: Smart city infrastructure.

- Architecture: Event-driven architecture using edge devices and centralized cloud platforms.

Conclusion

The architecture of distributed systems is fundamental to their operation, enabling them to meet diverse requirements like scalability, reliability, and performance. From architectural styles like client-server and peer-to-peer to middleware organization and specific system architectures, understanding these components provides a solid foundation for designing robust and efficient distributed systems. Example architectures like GFS, HDFS, and AWS demonstrate the practical application of these principles in real-world scenarios.

Unit 3: Processes

Distributed Systems: Processes (6 Hours)

3. Processes

Processes are fundamental to the operation of distributed systems. They represent active entities that execute code, manage resources, and perform tasks in the system. In distributed systems, processes may span multiple nodes, coordinate with each other, and handle communication to achieve system objectives.

3.1 Threads

Threads are lightweight execution units within a process that share the same address space but can run independently. They are crucial for improving concurrency in distributed systems.

-

Multithreading in Distributed Systems

- Threads allow processes to perform multiple tasks simultaneously, such as handling multiple client requests.

- Example: A web server uses threads to manage concurrent client connections.

-

Advantages of Threads

- Efficiency: Threads consume fewer resources than processes.

- Responsiveness: Multithreaded applications respond faster by allowing background tasks to run concurrently.

- Parallelism: Leverages multi-core processors for improved performance.

-

Thread Management

- Threads are managed by the operating system or a thread library (e.g., POSIX threads).

- Thread Models:

- User-level threads: Managed by user libraries.

- Kernel-level threads: Managed by the operating system.

- Synchronization Mechanisms: Mutexes, semaphores, and condition variables prevent race conditions and ensure thread safety.

-

Challenges in Threading

- Deadlocks, race conditions, and thread synchronization complexity.
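The mutex mechanism listed above can be illustrated with a minimal Python sketch. It uses the standard `threading.Lock`; the `Counter` class, the `run_workers` helper, and the thread and iteration counts are hypothetical names chosen for this example only.

```python
import threading


class Counter:
    """Shared counter guarded by a mutex (hypothetical example class)."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may run the read-modify-write below.
        with self._lock:
            self.value += 1


def run_workers(num_threads=4, increments=10_000):
    """Start several threads that all update the same counter."""
    counter = Counter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every worker has finished
    return counter.value
```

With the lock in place, the final value equals `num_threads * increments`. Without it, concurrent updates could be lost, which is the race condition the notes refer to.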

3.2 Virtualization

Virtualization abstracts physical resources to create virtual environments, enabling flexibility and scalability in distributed systems.

-

Types of Virtualization

- Hardware Virtualization: Creates virtual machines (VMs) on physical hardware.

- Example: VMware, Microsoft Hyper-V.

- Operating System Virtualization: Allows multiple isolated user-space instances on a single kernel.

- Example: Docker containers.

- Network Virtualization: Abstracts physical network resources to create virtual networks.

- Example: Software-defined networking (SDN).

- Storage Virtualization: Abstracts physical storage to create logical storage pools.

- Example: SAN, NAS.

-

Benefits of Virtualization

- Resource Optimization: Maximizes hardware utilization.

- Isolation: Each virtual environment operates independently.

- Scalability: Quickly scale resources based on demand.

- Portability: Virtual environments can be moved across physical machines.

-

Role in Distributed Systems

- Virtualization enables flexible deployment and management of distributed applications across multiple nodes.

-

Challenges

- Performance overhead and security concerns due to shared resources.

3.3 Clients

Clients are end-user devices or software that request services from distributed systems. They interact with servers to access resources and perform tasks.

-

Types of Clients

- Thin Clients: Minimal functionality; rely heavily on servers for processing.

- Example: Web browsers accessing cloud applications.

- Thick Clients: Perform significant processing locally, reducing server dependency.

- Example: Desktop applications like Microsoft Excel.

- Mobile Clients: Optimized for mobile devices with resource constraints.

- Example: Mobile banking apps.

-

Client Responsibilities

- User Interface (UI): Presenting information and collecting user input.

- Communication: Sending requests and receiving responses from servers.

- Data Caching: Temporarily storing data to reduce server load and latency.

-

Challenges in Client Design

- Limited processing power and storage, especially in thin and mobile clients.

- Network dependency and latency issues.

3.4 Servers

Servers are specialized processes or machines that provide services to clients. They handle client requests, perform computations, and manage resources.

-

Types of Servers

- Application Servers: Host business logic and application services.

- Example: Apache Tomcat for Java applications.

- Database Servers: Store, manage, and query data.

- Example: MySQL, MongoDB.

- Web Servers: Handle HTTP requests and deliver web content.

- Example: Nginx, Apache HTTP Server.

- File Servers: Provide shared access to files.

- Example: Network Attached Storage (NAS).

-

Server Models

- Iterative Servers: Handle one request at a time; simple but limited concurrency.

- Concurrent Servers: Handle multiple requests simultaneously using threads or processes.

- Event-Driven Servers: Use asynchronous I/O to efficiently manage requests without blocking.

-

Server Responsibilities

- Processing Requests: Execute tasks requested by clients.

- Resource Management: Allocate and manage CPU, memory, and storage.

- Security: Authenticate clients and ensure data integrity.

-

Challenges in Server Design

- Handling high loads, ensuring fault tolerance, and maintaining security.
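The three server models listed above (iterative, concurrent, event-driven) can be contrasted in a short sketch. To stay self-contained it uses no sockets: requests are plain strings, and `handle` is a hypothetical stand-in for real request processing. It illustrates the control flow only and is not a production server.

```python
import asyncio
from concurrent.futures import ThreadPoolExecutor


def handle(request):
    # Stand-in for real request processing (hypothetical handler).
    return request.upper()


def iterative_server(requests):
    # One request at a time, in arrival order.
    return [handle(r) for r in requests]


def concurrent_server(requests, workers=4):
    # A pool of threads handles several requests at once;
    # map() still returns the results in request order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(handle, requests))


async def _handle_async(request):
    await asyncio.sleep(0)  # yield to the event loop, as non-blocking I/O would
    return handle(request)


def event_driven_server(requests):
    # A single thread multiplexes all requests on one event loop.
    async def main():
        return await asyncio.gather(*(_handle_async(r) for r in requests))

    return asyncio.run(main())
```

All three functions return the same results. They differ in how many requests are in progress at once and in whether extra threads are needed to get there.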

3.5 Code Migration

Code migration involves moving executable code between machines in a distributed system. It enhances flexibility, load balancing, and resource utilization.

-

Types of Code Migration

- Process Migration: Moving an entire process, including its execution state, from one machine to another.

- Thread Migration: Transferring a thread to execute on a different machine.

- Code Shipping: Sending code to a remote machine for execution.

- Example: Sending a JavaScript function to a web browser.

-

Advantages of Code Migration

- Load Balancing: Redistribute tasks to avoid overloading a single machine.

- Reduced Latency: Move computation closer to the data or users.

- Resource Optimization: Leverage underutilized resources in the system.

-

Mechanisms of Code Migration

- Strong Mobility: The code and its execution state are transferred.

- Weak Mobility: Only the code is transferred, and execution restarts on the new machine.

-

Challenges in Code Migration

- State Transfer: Ensuring the accurate transfer of process states.

- Security: Preventing unauthorized access to code or data during migration.

- Heterogeneity: Adapting to differences in hardware or software environments.

-

Examples of Code Migration

- Mobile Agents: Self-contained programs that move between nodes to perform tasks.

- Example: Web crawlers.

- Edge Computing: Code migration to edge devices for real-time processing.

- Cloud Systems: Virtual machines or containers migrating between cloud servers.

Conclusion

Processes in distributed systems, whether threads, clients, servers, or migrated code, are critical for system operation. Threads enhance concurrency, virtualization enables efficient resource utilization, clients and servers provide essential system interaction, and code migration improves flexibility and load management. Understanding these components is vital for designing robust and efficient distributed systems.

Unit 4: Communication

Distributed Systems: Communication (Detailed Notes)

4. Communication

Communication is a key aspect of distributed systems. It enables the exchange of information between processes running on different nodes in a network. This section explores various communication paradigms and their implementation in distributed systems.

4.1 Foundations

Key Concepts

- Communication Paradigms:

- Message Passing: Exchange of messages between processes.

- Shared Memory: Shared address space for communication.

- Challenges: Latency, bandwidth, reliability, ordering, and failure handling.

- Protocols: Standardized rules for data exchange (e.g., TCP/IP, UDP).

- End-to-End Communication: Ensures delivery, ordering, and flow control.

Synchronous vs Asynchronous Communication

- Synchronous: Sender waits for the receiver to acknowledge the message.

Example: Blocking Remote Procedure Call (RPC).

- Asynchronous: Sender continues without waiting for acknowledgment.

Example: Non-blocking message queues.

4.2 Remote Procedure Call (RPC)

Overview

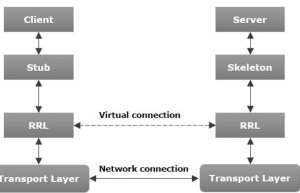

RPC abstracts communication in distributed systems by allowing a program to execute a procedure on a remote system as if it were local.

Steps in RPC:

- Stub Generation: Proxy code that hides communication details.

- Marshalling: Serializing data into a format for transmission.

- Transmission: Data is sent over the network.

- Execution: Remote server executes the procedure.

- Response: Results are sent back to the client.
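The steps above can be sketched in a few lines of Python. This is a simplified illustration, not a real RPC framework: JSON is assumed as the marshalling format, the "network" is a direct function call, and the names `Stub`, `server_dispatch`, `PROCEDURES`, and `add` are hypothetical.

```python
import json


# --- Server side ------------------------------------------------------
def add(a, b):
    return a + b


PROCEDURES = {"add": add}  # procedures the server exposes


def server_dispatch(request_bytes):
    """Unmarshal the request, execute the procedure, marshal the reply."""
    request = json.loads(request_bytes.decode("utf-8"))
    try:
        result = PROCEDURES[request["method"]](*request["params"])
        reply = {"result": result, "error": None}
    except Exception as exc:  # report failures back to the caller
        reply = {"result": None, "error": str(exc)}
    return json.dumps(reply).encode("utf-8")


# --- Client side ------------------------------------------------------
class Stub:
    """Client stub: makes a remote procedure look like a local method."""

    def __init__(self, transport):
        self._transport = transport  # callable taking bytes, returning bytes

    def __getattr__(self, method):
        def call(*params):
            # Marshalling: serialize the call into bytes for transmission.
            request = json.dumps({"method": method, "params": params})
            reply_bytes = self._transport(request.encode("utf-8"))
            reply = json.loads(reply_bytes.decode("utf-8"))
            if reply["error"] is not None:
                raise RuntimeError(reply["error"])
            return reply["result"]

        return call


# Transmission is simulated by calling the server function directly.
stub = Stub(transport=server_dispatch)
```

Calling `stub.add(2, 3)` reads like a local call, but the arguments travel as bytes and the result comes back the same way. Replacing the `transport` callable with one that sends the bytes over a socket would not change the calling code, which is the abstraction RPC aims for.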

Advantages

- Simplifies distributed programming.

- Abstracts networking details.

Challenges

- Latency: Due to network transmission.

- Failure Handling: Handling server or network failures.

Optimizations

- Caching: Reduce remote calls for frequently accessed data.

- Asynchronous RPC: Improve performance by non-blocking calls.

4.3 Message-Oriented Communication

Definition

A communication model where processes exchange discrete messages. This model is used in systems requiring high decoupling and asynchronous communication.

Message Queue Systems

-

Producer/Consumer Model:

- Producers send messages to queues.

- Consumers retrieve messages from queues.

-

Features:

- Persistence: Messages stored for reliability.

- Prioritization: High-priority messages processed first.
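A minimal in-process sketch of the producer/consumer model with prioritization is shown below. It uses Python's standard `queue.PriorityQueue` as a stand-in for a broker; the message contents and the `run_demo` helper are made up for illustration, and persistence is not modelled.

```python
import queue
import threading


def run_demo():
    """Queue three messages, then consume them in priority order."""
    broker = queue.PriorityQueue()  # lower number = higher priority
    received = []

    def producer():
        broker.put((2, "nightly report"))
        broker.put((1, "payment failed"))
        broker.put((3, "newsletter"))

    def consumer():
        for _ in range(3):
            priority, message = broker.get()
            received.append(message)
            broker.task_done()

    p = threading.Thread(target=producer)
    p.start()
    p.join()  # all messages are queued before the consumer starts
    c = threading.Thread(target=consumer)
    c.start()
    c.join()
    return received
```

The producer and consumer never call each other directly; they only share the queue, which is what gives message-oriented communication its decoupling.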

Examples:

- Message Brokers: RabbitMQ, Kafka.

- Protocols: AMQP, MQTT.

Challenges

- Handling duplicate messages.

- Ensuring exactly-once delivery in distributed queues.

4.4 Multicast Communication

Definition

A communication mechanism where a single message is sent to a group of recipients.

Multicast Models

- Application-Level Multicast: Handled by the application layer.

- Network-Level Multicast: Handled by network routers using protocols like IP Multicast.

Reliable Multicast

- Ensures message delivery to all recipients.

- Handles message duplication, ordering, and fault tolerance.

Use Cases

- Distributed databases.

- Collaborative applications (e.g., multiplayer games).

Challenges

- Network congestion.

- Group management for dynamic membership.

4.5 Case Study: Java RMI and Message Passing Interface (MPI)

Java RMI (Remote Method Invocation)

-

Overview:

- Allows Java objects to invoke methods on remote Java objects.

- Built on the RPC model.

-

Components:

- Stub and Skeleton: Proxy objects for client and server communication.

- RMI Registry: Service to locate remote objects.

-

Process:

- Client obtains a reference to the remote object from the RMI registry.

- Calls methods on the reference, which are executed remotely.

-

Features:

- Object serialization.

- Transparent communication.

Message Passing Interface (MPI)

-

Overview:

- A standard for message-passing used in high-performance computing.

-

Communication Primitives:

- Point-to-Point Communication: Messages sent between specific nodes.

- Collective Communication: Messages sent to groups of nodes.

-

Features:

- Language-independent.

- Supports parallel computation.

-

Examples:

- MPI_Send(), MPI_Recv() for message exchange.

- MPI_Bcast() for broadcasting.

Comparison

| Feature | Java RMI | MPI |

|---|---|---|

| Abstraction | Method invocation | Message exchange |

| Ease of Use | High | Medium |

| Scalability | Moderate | High (HPC systems) |

| Use Case | Enterprise apps | Scientific computing |

Summary

The communication mechanisms in distributed systems range from basic message-passing models to advanced paradigms like RPC and multicasting. By leveraging tools like Java RMI and MPI, developers can build robust and scalable distributed applications suited to their specific needs.

Unit 5: Naming

Distributed Systems: Naming (Detailed Notes)

5. Naming

Naming is a fundamental concept in distributed systems, as it provides a way to identify, locate, and manage resources. It allows processes, services, and devices to interact without having to directly reference physical or network locations.

5.1 Name Identifiers and Addresses

Name

- A name is a human-readable label used to refer to an entity (e.g., file, server, device).

- Example: A file name (“report.docx”) or a domain name (“example.com”).

Identifiers

- Unique labels assigned to entities to distinguish them.

- Examples: Process ID (PID), object references in programming.

Address

- Specifies the location of an entity, often tied to its physical or network position.

- Example: IP address (“192.168.1.1”) or memory address in computing.

Relationship Between Name, Identifier, and Address

- Name → Identifier → Address:

- A name is mapped to an identifier.

- The identifier is further resolved to an address.

- Example:

- Name: www.example.com

- Identifier: DNS record for the website.

- Address: 93.184.216.34 (IP address).
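The two-step mapping from name to identifier to address can be sketched with two lookup tables. The table contents, the identifier `record-42`, and the helper names are hypothetical; real systems keep these mappings in name servers rather than in-process dictionaries.

```python
# Hypothetical lookup tables for illustration only.
NAME_TO_ID = {"www.example.com": "record-42"}
ID_TO_ADDRESS = {"record-42": "93.184.216.34"}


def resolve(name):
    """Resolve a name to an address via its identifier."""
    identifier = NAME_TO_ID[name]
    return ID_TO_ADDRESS[identifier]


def move(identifier, new_address):
    """Relocate an entity: only the identifier-to-address binding changes."""
    ID_TO_ADDRESS[identifier] = new_address
```

Because the name is bound to the identifier rather than to the address, moving the entity with `move` leaves the name valid, which is the persistence property listed among the challenges.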

Key Challenges

- Uniqueness: Ensuring no two entities share the same identifier.

- Persistence: Names remain valid even when the address changes.

5.2 Structured Naming

Hierarchical Naming

- Definition: Names are structured in a hierarchy for scalability and manageability.

- Examples:

- File systems: /home/user/documents/file.txt.

- Domain Name System (DNS): www.subdomain.example.com.

Flat Naming

- Definition: Names have no specific structure, often represented as unique identifiers.

- Examples:

- UUIDs (Universally Unique Identifiers).

- Content-based addressing: Hashes used in content delivery networks.

Decentralized Naming

- Definition: Naming responsibilities are distributed across multiple nodes.

- Examples:

- Peer-to-peer systems: BitTorrent file hashes.

- Distributed Hash Tables (DHTs): Chord, Kademlia.

Design Considerations

- Scalability: Hierarchical naming is scalable for large systems.

- Resilience: Flat or decentralized naming systems are more fault-tolerant.

5.3 Attribute-Based Naming

Overview

- Instead of explicitly naming entities, attribute-based naming identifies entities based on their properties or metadata.

- Example: Searching for a printer with attributes: color = true, location = 2nd floor.

Components

- Attributes: Descriptive properties (e.g., file type, date created).

- Search Mechanism: Querying based on attributes.
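As a small illustration of attribute-based lookup, the sketch below filters a list of entities by attribute values. The printer records and the `find` helper are invented for this example; a directory service such as LDAP would provide a far richer query language.

```python
# Hypothetical directory entries described by attributes.
PRINTERS = [
    {"name": "prn-101", "color": True, "location": "2nd floor"},
    {"name": "prn-102", "color": False, "location": "2nd floor"},
    {"name": "prn-201", "color": True, "location": "3rd floor"},
]


def find(entities, **wanted):
    """Return the entities whose attributes match every requested value."""
    return [
        entity
        for entity in entities
        if all(entity.get(key) == value for key, value in wanted.items())
    ]
```

A query such as `find(PRINTERS, color=True, location="2nd floor")` never mentions a printer name; the caller describes what it needs and the search mechanism picks the matching entities.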

Advantages

- Flexibility: Enables dynamic resource discovery.

- Expressiveness: Allows queries using rich metadata.

Use Cases

- Directory Services: LDAP (Lightweight Directory Access Protocol).

- Cloud Services: Resource tagging for virtual machines, storage, etc.

Challenges

- Scalability: Efficiently indexing and searching large datasets.

- Consistency: Handling updates to attributes in distributed settings.

5.4 Case Study: The Global Name Service (GNS)

Overview

The Global Name Service (GNS) was designed to provide a scalable, distributed naming system for large networks.

Features

-

Hierarchical Namespace:

- Divides names into domains for scalability.

- Example: edu.mit.cs.

-

Location Independence:

- Names are resolved to addresses dynamically, allowing resources to move without changing their name.

-

Replication and Fault Tolerance:

- Data replicated across multiple servers to ensure availability and reliability.

Key Components

-

Name Resolution:

- Translates human-readable names into machine-usable identifiers or addresses.

-

Caching:

- Improves performance by storing resolved names locally.

-

Administrative Domains:

- Allows decentralization of management responsibilities.

How GNS Works

- A user queries a name (e.g., printer.sales.company.com).

- The query traverses the namespace hierarchy, starting from the root.

- The GNS resolves the name to its corresponding address or identifier.
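The traversal described above can be sketched as a walk down a tree of nested dictionaries. This is only an illustration of hierarchical resolution in general, not the actual GNS implementation: the `NAMESPACE` contents, the address `10.0.5.17`, and the function name are assumptions.

```python
# Hypothetical namespace tree: each dictionary level is one domain.
NAMESPACE = {
    "com": {
        "company": {
            "sales": {
                "printer": "10.0.5.17",
            },
        },
    },
}


def resolve_name(name, root=NAMESPACE):
    """Resolve a dotted name by walking from the root, rightmost label first."""
    node = root
    for label in reversed(name.split(".")):
        if not isinstance(node, dict) or label not in node:
            raise KeyError(f"cannot resolve {name!r} at label {label!r}")
        node = node[label]
    return node
```

In a distributed deployment each level of the tree would typically be served by a different name server, so every step of the loop corresponds to a query sent to the server responsible for that domain.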

Benefits

- Simplifies resource discovery and management in large-scale distributed systems.

- Supports dynamic environments where resources frequently change locations.

Comparison with DNS

| Feature | GNS | DNS |

|---|---|---|

| Scalability | High | High |

| Dynamic Binding | Supported | Limited (static entries) |

| Fault Tolerance | Replication supported | Replication supported |

| Use Case | General distributed systems | Internet domain resolution |

Summary

Naming in distributed systems provides a mechanism to identify and locate entities, facilitating efficient communication and resource management. From structured and attribute-based naming to the design of global services like GNS, the naming models ensure scalability, fault tolerance, and ease of use in diverse distributed environments.

Unit 6: Coordination

Distributed Systems: Coordination (Detailed Notes)

6. Coordination

Coordination in distributed systems ensures that processes running on multiple nodes can work together efficiently and consistently. This involves synchronizing actions, ordering events, managing resources, and handling communication.

6.1 Clock Synchronization

Overview

Clock synchronization is crucial for ordering events and maintaining consistency across distributed systems since no universal clock is available.

Key Concepts

- Clock Drift: Differences in clock speeds among nodes.

- Clock Skew: Differences in absolute time values between clocks.

Types of Synchronization

- External Synchronization: Aligns clocks with an external reference (e.g., UTC).

- Example: Network Time Protocol (NTP).

- Internal Synchronization: Ensures clocks within the system are consistent relative to each other.

Techniques

- Cristian’s Algorithm: Synchronizes a client’s clock to a server’s clock using round-trip message time.

- Berkeley Algorithm: Synchronizes all nodes to a common time by averaging their clocks.

- NTP (Network Time Protocol): A hierarchical synchronization protocol widely used on the Internet.
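The arithmetic behind the first two techniques can be sketched as follows. Both functions are simplified: the Cristian estimate assumes the request and reply take equally long, and the Berkeley step averages all clocks, leaving out the polling by a coordinator and the discarding of outliers that a fuller version would include. Function and parameter names are illustrative.

```python
def cristian_estimate(t_request, t_reply, server_time):
    """Estimate the server's current time at the moment the reply arrives.

    t_request and t_reply are local clock readings taken when the request
    was sent and when the reply was received. The one-way delay is assumed
    to be half of the round trip.
    """
    round_trip = t_reply - t_request
    return server_time + round_trip / 2


def berkeley_adjustments(clocks):
    """Return the offset each node should apply to reach the average time.

    clocks maps a node name to its current clock reading.
    """
    average = sum(clocks.values()) / len(clocks)
    return {node: average - value for node, value in clocks.items()}
```

For example, with readings in milliseconds, a request sent at 1000 and answered at 1040 with a server time of 5000 gives an estimate of 5020. For clocks reading 180, 205, and 170 minutes, the average is 185, so the nodes adjust by +5, -20, and +15 minutes respectively.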

6.2 Logical Clocks

Overview

Logical clocks provide a mechanism for ordering events in distributed systems without relying on physical clocks.

Lamport Timestamps

- Concept: Assigns a logical timestamp to each event so that timestamps respect causality (the happens-before relationship).

- Rule: If event A causes event B, then A → B.

- Timestamp Rule: If A → B, then T(A) < T(B). The converse does not hold: T(A) < T(B) alone does not imply that A happened before B.

- Algorithm:

- Increment the local clock before executing an event.

- Include the timestamp in messages; on receipt, set the local clock to the maximum of its current value and the received timestamp, then increment it.
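The algorithm above fits in a small class. This is a minimal sketch: the class and method names are chosen for illustration, and message transport is left out, so a "message" is just the timestamp handed from one clock to another.

```python
class LamportClock:
    """Logical clock for one process."""

    def __init__(self):
        self.time = 0

    def tick(self):
        """Local event: advance the clock and return the event's timestamp."""
        self.time += 1
        return self.time

    def send(self):
        """Sending is an event; the returned timestamp travels with the message."""
        return self.tick()

    def receive(self, message_time):
        """Jump past the sender's timestamp, then count the receive event."""
        self.time = max(self.time, message_time) + 1
        return self.time
```

If process P sends a message with timestamp `ts` and process Q calls `receive(ts)`, Q's clock ends up strictly greater than `ts`, so the send is ordered before the receive as the timestamp rule requires.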

Vector Clocks

- Concept: Extends Lamport’s idea by using a vector of timestamps for precise causality tracking.

- Algorithm:

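- Each process maintains a vector of logical clocks, with one entry per process.

- On a local event, a process increments its own entry. On receipt of a message, it takes the element-wise maximum of its vector and the received vector, then increments its own entry.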

- Advantages: Tracks concurrent events explicitly.
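A vector clock can be sketched in the same style. The class and helper names below are illustrative, processes are identified by their index in the vector, and message transport is again omitted.

```python
class VectorClock:
    """Vector clock for one process in a fixed group of processes."""

    def __init__(self, process_id, num_processes):
        self.pid = process_id
        self.clock = [0] * num_processes

    def tick(self):
        """Local event: increment this process's own entry."""
        self.clock[self.pid] += 1
        return list(self.clock)

    def send(self):
        """Sending is an event; the returned vector travels with the message."""
        return self.tick()

    def receive(self, other):
        """Merge with the received vector, then count the receive event."""
        self.clock = [max(a, b) for a, b in zip(self.clock, other)]
        return self.tick()


def happened_before(a, b):
    """True if the event stamped a causally precedes the event stamped b."""
    return all(x <= y for x, y in zip(a, b)) and a != b


def concurrent(a, b):
    """True if neither event causally precedes the other."""
    return a != b and not happened_before(a, b) and not happened_before(b, a)
```

Unlike Lamport timestamps, comparing two vectors tells you whether the events are causally related or concurrent: `[1, 0]` and `[0, 1]` are concurrent, while `[1, 0]` happened before `[1, 2]`.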

6.3 Mutual Exclusion

Overview

Mutual exclusion ensures that only one process accesses a critical section (shared resource) at a time.

Algorithms for Mutual Exclusion

-

Centralized Algorithm:

- A coordinator grants access to the critical section.

- Advantages: Simple, low message overhead.

- Disadvantages: Single point of failure.

-

Ricart-Agrawala Algorithm (Distributed):

- Processes send requests to all others before entering the critical section.

- Uses logical clocks to order requests.

- Disadvantage: High message overhead.

-

Token-Based Algorithm:

- A unique token is passed around. The holder can enter the critical section.

- Advantages: Reduces message overhead.

- Disadvantages: Token loss requires recovery mechanisms.
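The core of the Ricart-Agrawala algorithm is the rule a process uses to decide whether to answer an incoming request immediately or to defer the reply. The sketch below shows only that rule; the state names and the `(timestamp, process_id)` request format are assumptions made for the example, and messaging is left out.

```python
def should_defer(state, my_request, incoming_request):
    """Decide whether to delay the reply to an incoming request.

    state is "RELEASED", "WANTED", or "HELD" for the local process.
    Requests are (lamport_timestamp, process_id) tuples; the smaller
    tuple has priority, with the process ID breaking timestamp ties.
    """
    if state == "HELD":
        return True  # currently in the critical section
    if state == "WANTED":
        return my_request < incoming_request  # own request is older
    return False  # not interested: reply at once
```

A process enters the critical section once it has collected replies from all other processes, and it sends its deferred replies when it leaves. This is where the high message overhead noted above comes from.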

6.4 Election Algorithms

Overview

Election algorithms ensure that one process is designated as the coordinator or leader.

Types of Election Algorithms

-

Bully Algorithm:

- The process with the highest ID becomes the coordinator.

- Steps:

- A process sends election messages to all higher-ID processes.

- If no higher-ID process responds, it declares itself the leader; if one responds, that process takes over the election.

-

Ring Algorithm:

- Processes are arranged in a logical ring.

- An election message circulates around the ring collecting process IDs; the live process with the highest ID is chosen as leader.
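The bully algorithm can be sketched as a simplified simulation. The function name `bully_election` is hypothetical, message passing is replaced by a direct membership check, and the takeover is modelled as a chain in which the lowest responding higher-ID process continues the election. In the real algorithm every responding process starts its own election, but the outcome is the same.

```python
def bully_election(initiator, alive_ids):
    """Return the ID elected when `initiator` (assumed alive) starts an election."""
    candidate = initiator
    while True:
        # "Send" election messages to every live process with a higher ID.
        higher = [pid for pid in alive_ids if pid > candidate]
        if not higher:
            # Nobody higher answered: the candidate wins.
            return candidate
        # A higher process answered and takes over the election.
        candidate = min(higher)


leader = bully_election(2, {1, 2, 4, 5})   # process 6 (the old leader) has crashed
```

Whoever starts the election, the live process with the highest ID always ends up as coordinator.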

Challenges

- Fault tolerance: Detecting and handling failures.

- Scalability: Efficient algorithms for large systems.

6.5 Location Systems

Overview

A location system helps locate resources, nodes, or processes in a distributed environment.

Types of Location Systems

- Directory-Based Systems: Centralized or distributed directories map resource names to locations.

- Decentralized Systems: Use distributed hash tables (DHTs) or gossip protocols.

- Geolocation Systems: Physical location tracking using GPS or similar technology.

Examples

- DNS for resource discovery.

- Geo-distributed systems for content delivery.

6.6 Distributed Event Matching

Overview

Distributed event matching ensures that events generated in one part of the system are delivered to relevant subscribers.

Publish-Subscribe Systems

- Topic-Based: Subscribers register for predefined topics (e.g., “news”, “sports”).

- Content-Based: Subscribers specify conditions (e.g., “price > 100”).
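The difference between the two subscription styles can be shown with a tiny in-memory broker. This is a sketch under simplifying assumptions: the `Broker` class and its methods are invented for this example, events are plain dictionaries, and content-based conditions are Python predicates.

```python
class Broker:
    """Matches events against topic-based and content-based subscriptions."""

    def __init__(self):
        self.topic_subs = {}      # topic -> list of subscriber names
        self.content_subs = []    # list of (subscriber name, predicate)

    def subscribe_topic(self, name, topic):
        self.topic_subs.setdefault(topic, []).append(name)

    def subscribe_content(self, name, predicate):
        self.content_subs.append((name, predicate))

    def match(self, event):
        # Topic-based: a single dictionary lookup on the event's topic.
        matched = list(self.topic_subs.get(event.get("topic"), []))
        # Content-based: every predicate has to be evaluated against the event.
        matched += [name for name, pred in self.content_subs if pred(event)]
        return matched


broker = Broker()
broker.subscribe_topic("alice", "sports")
broker.subscribe_content("bob", lambda e: e.get("price", 0) > 100)
recipients = broker.match({"topic": "sports", "price": 150})
```

The linear scan over predicates hints at why content-based matching is harder to scale than topic-based matching.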

Challenges

- Scalability: Efficiently matching events for a large number of subscribers.

- Consistency: Ensuring all relevant subscribers receive the event.

Examples

- Event brokers like RabbitMQ or Kafka.

- Notification services in cloud platforms.

6.7 Gossip-Based Coordination

Overview

Gossip protocols enable decentralized and robust coordination by disseminating information in a peer-to-peer manner.

Mechanism

- Each node periodically shares information with a randomly chosen neighbor.

- Over time, information spreads throughout the network like a “gossip.”
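A rough simulation of push-style gossip shows how quickly information spreads. The function `gossip_rounds` and its parameters are assumptions for this example: nodes are numbered 0 to n-1, node 0 starts with the update, and every informed node contacts one uniformly random node per round.

```python
import random

def gossip_rounds(n_nodes, seed=0):
    """Count the rounds until every node has received the update."""
    rng = random.Random(seed)   # seeded so the run is repeatable
    informed = {0}
    rounds = 0
    while len(informed) < n_nodes:
        for node in list(informed):
            # The chosen peer may already be informed: a redundant message.
            peer = rng.randrange(n_nodes)
            informed.add(peer)
        rounds += 1
    return rounds


rounds = gossip_rounds(64)
```

The informed set can at most double per round, so at least log2(n) rounds are needed; redundant contacts with already-informed nodes add a few more.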

Applications

- Failure Detection: Nodes share the status of neighbors.

- Membership Management: Nodes gossip about the addition or removal of members.

- Data Replication: Updates are disseminated via gossip.

Advantages

- Scalability: Works well in large, dynamic systems.

- Fault Tolerance: Resistant to node failures.

Challenges

- Convergence Time: Time taken for information to reach all nodes.

- Overhead: Redundant message transmissions.

Summary

Coordination in distributed systems is vital for maintaining consistency, managing resources, and ensuring proper synchronization among processes. From clock synchronization and logical clocks to mutual exclusion and gossip-based protocols, these techniques provide a comprehensive toolkit for handling the challenges of distributed environments.

Unit 7: Consistency and Replication

Distributed Systems: Consistency and Replication (Detailed Notes)

7. Consistency and Replication

Consistency and replication are fundamental in distributed systems to ensure availability, fault tolerance, and performance. Replication involves maintaining multiple copies (replicas) of data or services, while consistency ensures all replicas present a unified and reliable view to the users.

7.1 Introduction

Why Replication?

- Fault Tolerance: Ensures system reliability by providing backups.

- Performance: Reduces latency by placing replicas closer to users.

- Scalability: Balances load by distributing requests across replicas.

Challenges in Replication

- Consistency: Ensuring replicas stay synchronized.

- Concurrency: Managing concurrent updates to replicas.

- Fault Tolerance: Handling node failures without data loss.

7.2 Data-Centric Consistency Models

Overview

Data-centric consistency models define the rules for maintaining consistency among data replicas visible to the entire system.

Strict Consistency

- Definition: Any read operation returns the most recent write, as if executed on a single system.

- Challenges: Requires global synchronization; impractical in distributed systems due to latency.

Linearizability

- Definition: All operations appear to execute atomically in some order, consistent with real-time ordering.

- Use Case: Systems requiring strong guarantees like financial transactions.

Sequential Consistency

- Definition: Operations appear in the same order to all processes, but the order may not follow real time.

- Example: Distributed databases with consistent ordering of operations.

Causal Consistency

- Definition: Operations that are causally related are seen in the same order by all processes.

- Example: Social media updates with dependency (e.g., comments following a post).

Eventual Consistency

- Definition: Replicas converge to the same state eventually, assuming no updates are made for a period.

- Example: DNS and content delivery networks (CDNs).

7.3 Client-Centric Consistency Models

Overview

Client-centric models focus on the perspective of individual clients, ensuring consistency based on their interaction with the system.

Types of Client-Centric Models

-

Monotonic Reads:

- Guarantees that once a client reads a value, it will never see an older value in subsequent reads.

- Use Case: Systems where users expect a consistent view of data, such as email.

-

Monotonic Writes:

- Ensures write operations by a client are performed in the order issued.

- Use Case: Version control systems.

-

Read-Your-Writes:

- Guarantees that after a client writes to a data item, it will see the updated value in subsequent reads.

- Use Case: Collaborative editing tools.

-

Writes Follow Reads:

- Ensures that a write issued by a client after a read is applied to a copy that is at least as recent as the version the client read.

- Use Case: Shopping carts in e-commerce systems.

7.4 Replica Management

Overview

Replica management deals with creating, updating, and maintaining replicas in a distributed system.

Key Concepts

-

Replica Placement:

- Static Placement: Fixed locations for replicas.

- Dynamic Placement: Adjusting replica locations based on workload.

-

Replica Types:

- Primary Replicas: Original source of data updates.

- Secondary Replicas: Serve as backups or read-only copies.

Replication Strategies

-

Active Replication:

- All replicas process updates simultaneously.

- Use Case: Fault-tolerant systems.

-

Passive Replication:

- A primary replica handles updates, and changes are propagated to secondary replicas.

- Use Case: Systems prioritizing performance over strict consistency.

Challenges

- Synchronization between replicas.

- Managing storage and communication overhead.

7.5 Consistency Protocols

Overview

Consistency protocols enforce consistency rules across replicas.

Types of Protocols

-

Primary-Backup Protocols:

- A primary replica handles updates and propagates them to backups.

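- Because the primary orders all writes, this can provide strong consistency (linearizability when updates block until the backups acknowledge), but the primary is a single point of failure unless a backup can take over.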

-

Quorum-Based Protocols:

- Require a quorum of replicas to agree on each operation; a common choice is a majority, so that any two quorums share at least one replica.

- Use Case: Distributed databases like Cassandra.

-

Paxos and Raft (Consensus Algorithms):

- Ensure consistency through agreement among nodes.

- Use Case: Leader election and distributed state machines.

-

Gossip Protocols:

- Updates are propagated through random peer-to-peer communication.

- Use Case: Scalable, loosely consistent systems.
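For quorum-based protocols, a commonly taught formulation (an assumption here, since the notes only mention majorities) uses a read quorum R and a write quorum W over N replicas with R + W > N and 2W > N. The sketch below checks those conditions and shows how a read picks the newest value; the function names are hypothetical.

```python
def is_valid_quorum(n, r, w):
    # r + w > n: every read quorum overlaps every write quorum.
    # 2 * w > n: any two write quorums overlap, preventing conflicting writes.
    return r + w > n and 2 * w > n

def quorum_read(replies):
    # replies: (version, value) pairs returned by the replicas in the read quorum.
    # The overlap guarantees that at least one reply carries the latest version.
    return max(replies, key=lambda reply: reply[0])[1]


ok = is_valid_quorum(n=3, r=2, w=2)
too_weak = is_valid_quorum(n=3, r=1, w=1)
latest = quorum_read([(1, "old"), (2, "new")])
```

With N = 3, choosing R = W = 2 is the majority case described above; R = 1, W = 1 is faster but can return stale data.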

7.6 Caching and Replication in Web

Overview

Caching and replication improve web performance and scalability by reducing response times and offloading servers.

Caching

- Definition: Storing frequently accessed data closer to the client.

- Examples: Browser caches, edge caches in CDNs.

- Techniques:

- Time-to-Live (TTL): Expiration time for cached data.

- Validation: Ensures cached data is fresh using mechanisms like ETag or Last-Modified.
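The TTL technique can be illustrated with a small cache that treats entries as stale once their expiry time has passed. The `TTLCache` class is an assumption for this example, and the clock is injected as a function so the behaviour can be shown without waiting in real time.

```python
class TTLCache:
    """Cache whose entries expire ttl_seconds after being stored (illustrative)."""

    def __init__(self, ttl_seconds, clock):
        self.ttl = ttl_seconds
        self.clock = clock        # callable returning the current time in seconds
        self.entries = {}         # key -> (value, expiry time)

    def put(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        item = self.entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:
            # Stale entry: drop it so the caller refetches from the origin.
            del self.entries[key]
            return None
        return value


now = [0.0]                                    # fake clock for the demonstration
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("/index.html", "<html>v1</html>")
fresh = cache.get("/index.html")               # still within the TTL
now[0] = 61.0
expired = cache.get("/index.html")             # TTL elapsed: treated as a miss
```

A validating cache would, instead of discarding the stale entry, ask the origin whether it has changed and reuse it if not.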

Replication

- Definition: Maintaining multiple servers to handle requests.

- Examples: Content replication in CDNs.

- Replication Techniques:

- Full Replication: All servers hold the complete dataset.

- Partial Replication: Servers hold subsets of data.

Benefits of Caching and Replication

- Reduces latency by serving requests from nearby locations.

- Balances load across servers.

- Enhances fault tolerance.

Challenges

- Ensuring consistency between replicas and caches.

- Managing cache invalidation.

Summary

Consistency and replication are critical for maintaining the reliability and efficiency of distributed systems. By employing various consistency models, protocols, and caching techniques, distributed systems can balance trade-offs between performance, fault tolerance, and consistency. These mechanisms form the backbone of modern systems, including distributed databases, cloud services, and content delivery networks.

Unit 8: Fault Tolerance

Distributed Systems: Fault Tolerance (Detailed Notes)

8. Fault Tolerance

Fault tolerance is the ability of a distributed system to continue functioning correctly in the presence of faults. This ensures system reliability, availability, and consistent operation even when components fail.

8.1 Introduction to Fault Tolerance

What is Fault Tolerance?

Fault tolerance enables a system to detect, recover from, and adapt to faults while maintaining operational continuity. Faults can arise due to hardware failures, software bugs, or network issues.

Types of Faults

- Transient Faults: Occur temporarily and disappear after a short time (e.g., a dropped network packet).

- Intermittent Faults: Occur sporadically and may recur over time (e.g., unstable hardware connections).

- Permanent Faults: Persist until the faulty component is repaired or replaced (e.g., hardware failure).

Fault Tolerance Techniques

- Redundancy: Adding duplicate components (e.g., replicas, backup systems).

- Error Detection: Using checksums, timeouts, and acknowledgments to detect faults.

- Error Recovery: Techniques to restore the system to a consistent state.

- Fault Masking: Hiding faults from users (e.g., through majority voting).

8.2 Process Resilience

Definition

Process resilience ensures that the system continues functioning even when individual processes fail.

Techniques for Process Resilience

-

Replication: Running multiple copies of a process on different nodes.

- Active Replication: All replicas execute the same operations simultaneously.

- Passive Replication: One primary replica executes operations, and backups synchronize periodically.

-

Failure Detection:

- Heartbeats: Regular “alive” signals sent by processes.

- Timeout Mechanism: A process is assumed to have failed if no response is received within a specified time.

-

Process Migration:

- Moving a process to another node in case of failure.

- Example: Virtual machine migration in cloud systems.

-

Checkpointing:

- Periodically saving a process’s state to enable recovery.
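Heartbeat-based failure detection with a timeout can be sketched as follows. The `HeartbeatDetector` class is hypothetical, and timestamps are passed in explicitly rather than read from a real clock.

```python
class HeartbeatDetector:
    """Suspects a process once its last heartbeat is older than the timeout."""

    def __init__(self, timeout):
        self.timeout = timeout
        self.last_seen = {}       # process id -> time of the last heartbeat

    def heartbeat(self, pid, now):
        self.last_seen[pid] = now

    def suspected(self, now):
        # A silent process is only *suspected*: it may be slow, not crashed.
        return sorted(
            pid for pid, seen in self.last_seen.items()
            if now - seen > self.timeout
        )


detector = HeartbeatDetector(timeout=3.0)
detector.heartbeat("A", now=0.0)
detector.heartbeat("B", now=0.0)
detector.heartbeat("A", now=4.0)
suspects = detector.suspected(now=5.0)   # B has been silent for 5 s > 3 s
```

Choosing the timeout is a trade-off: a short value detects failures quickly but produces false suspicions when the network is slow.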

8.3 Reliable Client-Server Communication

Challenges in Reliable Communication

- Message Loss: Packets can be dropped due to network issues.

- Message Duplication: Retransmissions can cause duplicate messages.

- Message Corruption: Data may get altered during transmission.

Techniques for Reliable Communication

- Timeouts and Retransmissions: Resend messages if no acknowledgment is received within a specified time.

- Sequence Numbers: Attach sequence numbers to detect duplicate or out-of-order messages.

- Acknowledgments: Ensure message delivery is confirmed by the recipient.

- Two-Phase Commit Protocol: Guarantees transactional consistency across client-server communication.
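The interplay of sequence numbers and acknowledgments can be shown with a receiver that delivers messages in order and ignores duplicates. This is a simplified sketch: the `Receiver` class is an assumption for this example, and out-of-order messages are dropped and left for the sender to retransmit rather than buffered.

```python
class Receiver:
    """Delivers each message exactly once, in sequence order (illustrative)."""

    def __init__(self):
        self.expected = 0
        self.delivered = []

    def on_message(self, seq, payload):
        if seq == self.expected:
            self.delivered.append(payload)
            self.expected += 1
        # Duplicates (seq < expected) and gaps (seq > expected) are not delivered.
        # The cumulative ack names the highest in-order sequence number received.
        return self.expected - 1


r = Receiver()
ack1 = r.on_message(0, "a")   # delivered, ack 0
ack2 = r.on_message(0, "a")   # retransmitted duplicate: ignored, ack 0 again
ack3 = r.on_message(2, "c")   # arrives early: dropped, ack stays 0
ack4 = r.on_message(1, "b")   # delivered, ack 1
```

The sender retransmits anything beyond the last acknowledged sequence number after its timeout expires.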

Protocol Examples

- TCP (Transmission Control Protocol): Ensures reliable, ordered delivery of data.

8.4 Reliable Group Communication

Overview

Group communication involves multiple processes or nodes, often in replicated systems or distributed applications.

Requirements

- Atomicity: Messages are delivered to all group members or none.

- Ordering: Messages must follow a consistent order across the group.

Types of Ordering

- FIFO Ordering: Messages from a sender are delivered in the order sent.

- Causal Ordering: Preserves the causality of messages.

- Total Ordering: Ensures all members see messages in the same order.

Techniques for Reliable Group Communication

- Multicast Communication: Sends messages to multiple recipients. Examples: IP Multicast, Reliable Multicast protocols.

- Consensus Algorithms: Ensures agreement among group members (e.g., Paxos, Raft).

- Failure Detection: Monitors group members for failures and reconfigures the group if necessary.

8.5 Distributed Commit

Overview

Distributed commit ensures that a transaction spanning multiple nodes either commits or aborts across all participants.

Two-Phase Commit Protocol (2PC)

-

Phase 1: Prepare

- The coordinator asks participants to prepare for the transaction.

- Each participant checks if it can commit and replies (YES or NO).

-

Phase 2: Commit/Abort

- If all participants vote YES, the coordinator sends a COMMIT message.

- If any participant votes NO, the coordinator sends an ABORT message.
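The coordinator's decision rule in 2PC can be sketched as below. The function `two_phase_commit` is hypothetical, each participant is modelled as a callable that returns its vote, and failures, timeouts, and logging are deliberately left out.

```python
def two_phase_commit(participants):
    """participants: dict mapping a name to a callable returning True (YES) or False (NO)."""
    # Phase 1 (prepare): collect a vote from every participant.
    votes = {name: can_commit() for name, can_commit in participants.items()}
    # Phase 2 (commit/abort): commit only if the vote is unanimous.
    decision = "COMMIT" if all(votes.values()) else "ABORT"
    return decision, votes


all_yes, _ = two_phase_commit({"db1": lambda: True, "db2": lambda: True})
one_no, votes = two_phase_commit({"db1": lambda: True, "db2": lambda: False})
```

A single NO vote is enough to abort the whole transaction, which is what makes the outcome atomic across participants.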

Three-Phase Commit Protocol (3PC)

- Adds a “pre-commit” phase to reduce the chances of blocking in case of failures.

Challenges

- Blocking: Participants may wait indefinitely if the coordinator fails.

- Network Partitions: Ensuring commit/abort decisions across disconnected nodes.

8.6 Recovery

Overview

Recovery involves restoring a distributed system to a consistent state after a fault occurs.

Techniques for Recovery

-

Checkpointing

- Periodically saving the state of a process or system.

- Types:

- Coordinated Checkpointing: Processes coordinate their checkpoints so that together they form a globally consistent state.

- Uncoordinated Checkpointing: Processes take checkpoints independently, risking inconsistencies and cascading rollbacks (the domino effect).

-

Logging

- Undo Logging: Rolls back changes made during a failed operation.

- Redo Logging: Re-applies operations to reconstruct the desired state.

-

Failure Recovery Protocols

- Rollback Recovery: Reverts processes to a previous checkpoint.

- Forward Recovery: Attempts to continue operation by reconstructing the missing or inconsistent data.

-

Failure Detection and Reconfiguration

- Identify failed components and replace them with backups or replicas.
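Checkpointing and redo logging are often combined: recovery starts from the last checkpoint and replays the operations logged after it. The sketch below assumes a key-value state and a log of (key, value) writes; the function name `recover` is chosen only for this example.

```python
def recover(checkpoint, redo_log):
    """Rebuild state from the last checkpoint plus the writes logged after it."""
    state = dict(checkpoint)          # start from the saved state
    for key, value in redo_log:       # re-apply logged writes in their original order
        state[key] = value
    return state


checkpoint = {"x": 1}
redo_log = [("x", 2), ("y", 5)]
state = recover(checkpoint, redo_log)
```

Replaying the same log twice yields the same state, so recovery can safely be restarted if it is interrupted.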

Examples of Recovery Systems

- Distributed file systems (e.g., HDFS) use replication and checkpointing for recovery.

- Database management systems use transaction logs to ensure ACID properties.

Summary

Fault tolerance is a vital component of distributed systems, ensuring reliability and availability despite failures. Techniques such as replication, reliable communication, distributed commit protocols, and recovery mechanisms allow systems to detect, mask, and recover from faults. By implementing robust fault tolerance, distributed systems can handle a wide range of challenges, from transient faults to permanent failures, while maintaining consistent and reliable operation.

Unit 9: Security

Distributed Systems: Security (Detailed Notes)

9. Security

Security in distributed systems involves protecting data, communication, and operations against unauthorized access, tampering, and disruptions. It is essential to maintain confidentiality, integrity, availability, and accountability across the system.

9.1 Introduction to Security

Security Objectives

- Confidentiality: Prevent unauthorized access to data.

- Integrity: Ensure data and communications are not tampered with.

- Availability: Ensure resources and services are accessible to authorized users.

- Accountability: Keep a record of actions for auditing and accountability.

Challenges in Distributed Systems

- Decentralized Control: Security mechanisms must work across multiple nodes with no central authority.

- Network Vulnerabilities: Communication over networks is susceptible to interception and attacks.

- Heterogeneity: Diverse platforms and protocols require interoperable security solutions.

- Scalability: Security measures must scale with system size and usage.

9.2 Secure Channels

Definition

Secure channels ensure that communication between distributed system components is private and authenticated.

Mechanisms for Secure Channels

-

Encryption: Protects data from being read by unauthorized parties.

- Symmetric Encryption: Both parties use the same key (e.g., AES).

- Asymmetric Encryption: Uses a public-private key pair (e.g., RSA).

-

Authentication: Verifies the identity of communicating parties.

- Message Authentication Codes (MACs): Ensure message integrity and authenticity.

- Digital Signatures: Verify sender identity and data integrity.

-

Transport Layer Security (TLS): Provides end-to-end encryption and authentication.

- Used in HTTPS for secure web communication.

-

Virtual Private Networks (VPNs): Secure communication over untrusted networks by creating encrypted tunnels.
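A message authentication code can be illustrated with Python's standard `hmac` module. This is a minimal sketch assuming both parties already share a secret key; the key, the messages, and the helper names `make_tag` and `verify` are invented for this example.

```python
import hashlib
import hmac

def make_tag(key, message):
    # HMAC-SHA256 over the message, keyed with the shared secret.
    return hmac.new(key, message, hashlib.sha256).digest()

def verify(key, message, received_tag):
    # compare_digest runs in constant time to avoid leaking information via timing.
    return hmac.compare_digest(make_tag(key, message), received_tag)


key = b"shared-secret-key"
message = b"transfer 100 to account 42"
tag = make_tag(key, message)

genuine = verify(key, message, tag)
tampered = verify(key, b"transfer 900 to account 42", tag)
```

A MAC gives integrity and authenticity between the two key holders, but unlike a digital signature it cannot prove to a third party which of them produced the message.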

9.3 Access Control

Definition

Access control restricts who can access resources and what actions they can perform.

Components of Access Control

- Authentication: Establishes the identity of a user or process.

- Methods: Passwords, biometrics, two-factor authentication (2FA).

- Authorization: Defines what authenticated users are allowed to do.

- Implemented using access control policies.

- Auditing: Keeps records of access and actions for monitoring and accountability.

Access Control Models

-

Discretionary Access Control (DAC):

- Owners define access policies.

- Example: File permissions in UNIX systems.

-

Mandatory Access Control (MAC):

- Access is determined by a central authority based on security classifications.

- Example: Military systems with confidential, secret, and top-secret levels.

-

Role-Based Access Control (RBAC):

- Access is granted based on roles assigned to users.

- Example: Admin, manager, and employee roles in enterprise systems.

-

Attribute-Based Access Control (ABAC):

- Access is determined by attributes like user location, time of access, or device used.

- Example: Geo-restricted access policies.

9.4 Secure Naming

Overview

Naming in distributed systems maps human-readable names to resources (e.g., files, servers). Secure naming ensures the authenticity and integrity of this mapping.

Threats to Naming

- Spoofing: An attacker impersonates a legitimate resource.

- Redirection: Users are directed to malicious resources by tampering with mappings.

- Denial of Service (DoS): Attackers flood naming services, disrupting their availability.

Techniques for Secure Naming

-

DNS Security Extensions (DNSSEC):

- Adds digital signatures to DNS responses to verify authenticity.

-

Certificate Authorities (CAs):

- Issue digital certificates that bind public keys to resource names.

- Used in HTTPS to authenticate websites.

-

Name Resolution Protocols with Encryption:

- Example: DNS-over-HTTPS (DoH) encrypts DNS queries to prevent eavesdropping.

9.5 Security Management

Overview

Security management involves the processes, policies, and tools for maintaining the security of a distributed system.

Key Aspects of Security Management

-

Policy Management:

- Define security policies for access control, data protection, and communication.

- Example: Enforcing minimum password complexity or data encryption policies.

-

Key Management:

- Handles the generation, distribution, storage, and revocation of cryptographic keys.

- Example: Using Public Key Infrastructure (PKI) for managing digital certificates.

-

Intrusion Detection and Prevention Systems (IDPS):

- Monitor and detect suspicious activities.

- Example: Detecting unauthorized login attempts.

-

Patch Management:

- Regularly updating software to fix vulnerabilities.

-

Incident Response:

- Procedures for detecting, responding to, and recovering from security incidents.

Tools for Security Management

- Firewalls: Block unauthorized access to systems.

- Antivirus and Anti-Malware: Protect against malicious software.

- SIEM (Security Information and Event Management): Centralized logging and analysis of security events.

Summary

Security in distributed systems is multi-faceted, requiring mechanisms for secure communication, robust access control, reliable naming systems, and effective management strategies. By addressing these areas, distributed systems can protect data, ensure trustworthiness, and maintain consistent operations even in the face of attacks and vulnerabilities.

Syllabus

Course Description


The course introduces basic knowledge to give an understanding of how modern distributed systems operate. The focus of the course is on distributed algorithms and on practical aspects that should be considered when designing and implementing real systems. Some topics covered during the course are causality and logical clocks, synchronization and coordination algorithms, transactions and replication, and end-to-end system design. In addition, the course explores recent trends exemplified by current highly available and reliable distributed systems.

Course objectives

The objective of the course is to familiarize students with different aspects of distributed systems, middleware, and system-level support, and with the issues involved in designing distributed algorithms and, ultimately, systems.

Unit Contents

1. Introduction : 4 hrs

1.1 Characteristics

1.2 Design Goals

1.3 Types of Distributed Systems

1.4 Case Study: The World Wide Web

2. Architecture : 4 hrs

2.1 Architectural Styles

2.2 Middleware organization

2.3 System Architecture

2.4 Example Architectures

3. Processes : 6 hrs

3.1 Threads

3.2 Virtualization

3.3 Clients

3.4 Servers

3.5 Code Migration

4. Communication : 5 hrs

4.1 Foundations

4.2 Remote Procedure Call

4.3 Message-Oriented Communication

4.4 Multicast Communication

4.5 Case Study: Java RMI and Message Passing Interface (MPI)

5. Naming : 5 hrs

5.1 Names, Identifiers, and Addresses

5.2 Structured Naming

5.3 Attribute-based naming

5.4 Case Study: The Global Name Service

6. Coordination : 7 hrs

6.1 Clock Synchronization

6.2 Logical Clocks

6.3 Mutual Exclusion

6.4 Election Algorithm

6.5 Location System

6.6 Distributed Event Matching

6.7 Gossip-based coordination

7. Consistency and Replication : 5 hrs

7.1 Introduction

7.2 Data-centric consistency models

7.3 Client-centric consistency models

7.4 Replica management

7.5 Consistency protocols

7.6 Caching and Replication in Web

8. Fault Tolerance : 5 hrs

8.1 Introduction to fault tolerance

8.2 Process resilience

8.3 Reliable client-server communication

8.4 Reliable group communication

8.5 Distributed commit

8.6 Recovery

9. Security : 4 hrs

9.1 Introduction to security

9.2 Secure channels

9.3 Access control

9.4 Secure naming

9.5 Security Management

Text and Reference Books

References

- A.S. Tanenbaum, M. van Steen, “Distributed Systems”, Pearson Education.

- George Coulouris, Jean Dollimore, Tim Kindberg, “Distributed Systems Concepts and Design”, Third Edition, Pearson Education.

- Mukesh Singhal, “Advanced Concepts in Operating Systems”, McGraw-Hill Series in Computer Science.

- Ajay D. Kshemkalyani, Mukesh Singhal, “Distributed Computing: Principles, Algorithms, and Systems”, Cambridge University Press

- Christian Cachin, Rachid Guerraoui, Luís Rodrigues, “Introduction to Reliable and Secure Distributed Programming”, Springer.

Distributed Systems Old Questions 2023

BCA 6th Semester Distributed System Old Question

Group B

Attempt any SIX questions.

[6×5=30]

- What is middleware? Explain the different architecture styles of distributed systems.[2+3]

- Differentiate between stateful and stateless servers.

- Explain the Berkeley algorithm with a suitable diagram.

- Explain the types of data-centric consistency models.

- Define distributed commit. Explain the two-phase commit with a suitable diagram.[2+3]

- Describe security management in the context of distributed systems.

- Write short notes on (any two):

- a) Sequential consistency

- b) Access control matrix

- c) Message passing interface

Group C

Attempt any TWO questions.

[10×2=20]

- What is a distributed system? Explain the characteristics of distributed systems and different types of distributed systems.[2+4+4]

- What is an election algorithm? Explain the different election algorithms with a suitable diagram.[2+8]

- Explain Remote Procedure Call (RPC) and its working process with a suitable diagram. Furthermore, provide an explanation of message-oriented communication, along with an illustrative example.[5+5]

Distributed Systems Old Questions 2023 Solutions

BCA 6th Semester Distributed System Old Question – Solutions

Group B: Attempt any SIX questions (6 x 5 = 30)

1. What is middleware? Explain the different architecture styles of distributed systems. [2 + 3]

Middleware:

Middleware is software that acts as an intermediary between different software applications or between an application and the operating system in a distributed system. It provides communication and input/output, database management, and other services required for distributed computing.

Different Architecture Styles of Distributed Systems:

-

Client-Server Architecture:

- A centralized server provides services to multiple clients. The server is responsible for managing resources and responding to client requests.

- Example: Web server and web browsers.

-

Peer-to-Peer (P2P) Architecture:

- Every node in the system acts both as a client and a server, sharing resources without a centralized server.

- Example: File-sharing systems like BitTorrent.

-

Three-Tier Architecture:

- Divides the system into three layers: Presentation, Logic, and Data. This allows separation of concerns, where each layer can be managed independently.

- Example: Web applications.

-

Layered Architecture:

- Different system components are organized in layers, each with specific functionality. The lower layers provide services to the upper layers.

- Example: Network protocols like TCP/IP.

-

Service-Oriented Architecture (SOA):

- Distributed systems are built as collections of services that communicate over the network.

- Example: Cloud computing.

2. Differentiate between stateful and stateless servers.

| Criteria | Stateful Server | Stateless Server |
|---|---|---|
| State Information | Maintains the state of the client between requests. | Does not maintain any state information. |
| Session Management | Session is maintained between client and server. | Each request is independent and does not rely on past interactions. |
| Example | Database servers, where sessions are required. | Web servers (HTTP) that treat each request independently. |
| Scalability | Less scalable due to dependency on maintaining states. | More scalable because each request is independent. |
| Failure Handling | Requires handling of session recovery if a failure occurs. | Easier recovery as no session data needs to be restored. |

3. Explain the Berkeley algorithm with a suitable diagram.

Berkeley Algorithm:

The Berkeley algorithm is a method for clock synchronization in distributed systems. It allows a group of machines to synchronize their clocks based on an average time calculated by one of the machines (typically a master).

Steps:

- The master machine sends a message to all other machines in the system asking for their current time.

- Each machine replies with its current clock value.

- The master calculates the average of all the reported times and its own time, compensating for message delay.

- The master sends each machine the offset between the average and that machine's clock.

- Each machine adjusts its clock by the calculated offset.

Diagram:

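Master -- "report your time" --> Machine 1, Machine 2, Machine 3

Machine 1, Machine 2, Machine 3 -- current clock values --> Master

Master: computes the average of all clocks, including its own

Master -- individual offsets --> Machine 1, Machine 2, Machine 3 (each adjusts its clock)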
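The averaging step of the Berkeley algorithm can be worked through numerically. The sketch below ignores message delay, and the function name `berkeley_offsets` and the sample clock values (minutes since midnight) are assumptions for this example.

```python
def berkeley_offsets(master_time, worker_times):
    """Return the adjustment for each clock, master first, then the workers."""
    clocks = [master_time] + list(worker_times)
    average = sum(clocks) / len(clocks)
    # Each machine receives the amount to add to its own clock, not the absolute time.
    return [average - clock for clock in clocks]


# Master reads 3:00 (180 min); the workers read 3:25 (205 min) and 2:50 (170 min).
offsets = berkeley_offsets(180, [205, 170])
```

The average is 185 minutes (3:05), so the master advances by 5 minutes, the first worker goes back by 20, and the second advances by 15. In practice a clock is slowed down gradually rather than set backwards.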

4. Explain the types of data-centric consistency models.

Data-Centric Consistency Models: These models ensure that the replicas of data are consistent across a distributed system. The main models include:

-

Strict Consistency:

- All operations are seen in a single global order, and once an update is made, all subsequent reads reflect the new value immediately.

- Challenge: Difficult to implement due to high coordination.

-

Linearizability:

- A form of consistency where all operations appear to happen atomically and in real-time order. Once a write completes, all reads reflect the new value.

- Example: A globally distributed key-value store.

-

Sequential Consistency:

- Operations from all processes appear in some sequential order, but the order of operations does not need to follow real-time order.

- Example: A distributed file system where operations are sequential but not necessarily time-ordered.

-

Causal Consistency:

- Operations that are causally related are seen in the same order across all replicas, while concurrent operations may appear in different orders.

- Example: Social media systems where comments on posts are causally ordered.

-

Eventual Consistency:

- Replicas of data will eventually converge to the same value, but there may be temporary inconsistencies.

- Example: DNS systems, where updates to DNS records may take time to propagate.

5. Define distributed commit. Explain the two-phase commit with a suitable diagram. [2 + 3]

Distributed Commit:

A distributed commit is a protocol used to ensure that a transaction involving multiple distributed systems either commits (i.e., is completed successfully) or aborts (i.e., is rolled back) in all systems involved.

Two-Phase Commit (2PC):

2PC is a distributed commit protocol that ensures consistency in a distributed transaction.

Steps:

- Phase 1 (Prepare):

- The coordinator sends a “prepare” message to all participants.

- Each participant checks if it can commit and responds with “Yes” (can commit) or “No” (abort).

- Phase 2 (Commit/Abort):

- If all participants respond with “Yes”, the coordinator sends a “commit” message. If any participant responds with “No”, the coordinator sends an “abort” message.

Diagram:

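Coordinator -- PREPARE --> Participant 1, Participant 2, Participant 3

Participant 1, Participant 2, Participant 3 -- YES / NO votes --> Coordinator

Coordinator -- COMMIT (all YES) or ABORT (any NO) --> Participant 1, Participant 2, Participant 3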

6. Describe security management in the context of distributed systems.

Security Management in Distributed Systems:

Security management involves implementing processes, policies, and mechanisms to protect distributed systems from unauthorized access, attacks, and failures.

Key Aspects:

- Authentication: Verifying the identity of users or processes.

- Authorization: Determining what actions authenticated users or processes are allowed to perform.

- Confidentiality: Ensuring that data is not disclosed to unauthorized parties.

- Integrity: Ensuring that data remains consistent and unaltered during transmission and storage.

- Audit and Monitoring: Keeping track of system activities for detecting potential security threats and maintaining accountability.

- Key Management: Securely handling the generation, distribution, and storage of cryptographic keys.

7. Write short notes on (any two):

a) Sequential Consistency

- Sequential consistency ensures that the result of any execution of operations on a distributed system is equivalent to some sequential execution of those operations, in which the operations of each individual process appear in the order specified by its program.

- It does not require operations to happen in real-time order but ensures a global order of all operations.

b) Access Control Matrix

- An Access Control Matrix (ACM) is a table used to define the access rights of subjects (users, processes) to objects (files, databases) in a system.

- Each row represents a subject, and each column represents an object. The cells contain the access rights for each subject-object pair, such as read, write, execute.
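An access control matrix maps naturally onto a nested dictionary. In the sketch below the subjects, objects, and rights are made up for illustration, and `is_allowed` is a hypothetical helper.

```python
# Rows are subjects, columns are objects, and each cell holds a set of rights.
acm = {
    "alice": {"report.txt": {"read", "write"}, "payroll.db": {"read"}},
    "bob": {"report.txt": {"read"}},
}

def is_allowed(matrix, subject, obj, right):
    # A missing row or cell means the subject has no rights on that object.
    return right in matrix.get(subject, {}).get(obj, set())


alice_can_write = is_allowed(acm, "alice", "report.txt", "write")
bob_can_write = is_allowed(acm, "bob", "report.txt", "write")
bob_payroll = is_allowed(acm, "bob", "payroll.db", "read")
```

Storing the matrix by row, as here, corresponds to capability lists; storing it by column corresponds to access control lists.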

Group C: Attempt any TWO questions (10 x 2 = 20)

1. What is a distributed system? Explain the characteristics of distributed systems and different types of distributed systems. [2 + 4 + 4]

Distributed System:

A distributed system is a system where multiple independent computers work together to achieve a common goal. These systems communicate and coordinate via a network.

Characteristics:

- Concurrency: Multiple processes or components run simultaneously on different machines.

- Scalability: The system can grow and handle increasing load by adding resources.

- Fault Tolerance: The system continues to function even in the event of failures.

- Transparency: Users or applications are unaware of the underlying distribution and complexity of the system.

- Heterogeneity: Different hardware, operating systems, and network technologies can be used in the system.

Types of Distributed Systems:

- Client-Server Systems: A server provides services to multiple clients (e.g., web servers).

- Peer-to-Peer Systems: All nodes act as both clients and servers (e.g., file-sharing networks).

- Three-Tier Systems: The application is separated into three tiers: presentation, business logic, and data.

- Cloud Computing: A distributed infrastructure providing scalable and flexible resources via the internet.

2. What is an election algorithm? Explain the different election algorithms with a suitable diagram. [2 + 8]

Election Algorithm:

An election algorithm is used in distributed systems to select a coordinator or leader from a group of nodes.

Types of Election Algorithms:

- Bully Algorithm:

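- Any node that notices the coordinator has stopped responding initiates an election by sending an ELECTION message to every node with a higher ID.

- If it receives no response from higher nodes, it becomes the leader and announces this with a COORDINATOR message. If a higher node responds, that node takes over the election, so the live node with the highest ID eventually wins.

- Diagram:

Nodes: A, B, C (A has the highest ID, C the lowest). Election process: C detects that the coordinator has failed; C --> A, B (ELECTION); A and B reply OK and start their own elections; A gets no reply from a higher node; A --> B, C (COORDINATOR); A becomes leader.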
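The bully election can be sketched as a small sequential simulation. This is a simplification under stated assumptions: nodes are identified by integer IDs, `alive` is a hypothetical set of the nodes that currently answer messages, any live node may start the election, and real message passing and timeouts are replaced by a set lookup.

```python
from typing import Set


def bully_election(initiator: int, alive: Set[int]) -> int:
    """Simulate one bully election and return the new coordinator's ID."""
    if initiator not in alive:
        raise ValueError("a crashed node cannot start an election")
    current = initiator
    while True:
        # ELECTION goes to every higher ID; only live nodes answer.
        responders = [node for node in alive if node > current]
        if not responders:
            # Nobody higher answered, so this node becomes coordinator.
            return current
        # A higher node answered and takes over the election.
        # Following the lowest responder still ends at the highest live ID.
        current = min(responders)
```

For example, if nodes 1, 2, 3, and 5 are alive and node 2 starts the election, the election is handed upward until node 5 finds no higher live node and becomes coordinator.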

- Ring Algorithm:

- Nodes are arranged in a logical ring.

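- A node that notices the coordinator has failed initiates the election by sending an ELECTION message containing its own ID to the next live node. Each node appends its own ID and passes the message along.

- When the message returns to the initiator, the highest ID in the list is chosen, and a COORDINATOR message is circulated to announce the new leader.

- Diagram:

Ring: A -> B -> C -> D -> A. Election process: the message circulates once around the ring collecting IDs, and the node with the highest ID is elected.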
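A ring election can be sketched in the same style. The sketch assumes the common variant in which the election message collects the IDs of live nodes during one trip around the ring; the function name `ring_election`, the integer IDs, and the `alive` set are illustrative assumptions.

```python
from typing import List, Set


def ring_election(ring: List[int], initiator: int, alive: Set[int]) -> int:
    """Pass an election message once around the ring and return the winner.

    `ring` lists node IDs in ring order; crashed nodes are skipped.
    """
    if initiator not in alive:
        raise ValueError("a crashed node cannot start an election")
    start = ring.index(initiator)
    collected: List[int] = []
    for step in range(len(ring)):
        node = ring[(start + step) % len(ring)]
        if node in alive:
            collected.append(node)  # each live node appends its own ID
    # Back at the initiator: the highest collected ID is the coordinator.
    return max(collected)
```

With the ring `[3, 7, 1, 5]`, node 7 crashed, and node 1 starting the election, the message collects 1, 5, and 3, so node 5 is elected.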

3. Explain Remote Procedure Call (RPC) and its working process with a suitable diagram. Furthermore, provide an explanation of message-oriented communication, along with an illustrative example. [5 + 5]

Remote Procedure Call (RPC):

RPC allows a program to execute a procedure (function) on a remote server, as if it were a local call. It abstracts the communication complexity between different machines.

Working Process of RPC:

- Client Stub: The client calls a function locally, which is intercepted by a stub (a proxy function).

- Marshalling: The arguments are packed into a message by the client stub.

- Communication: The message is sent over the network to the server.

- Server Stub: The server stub receives the message, unpacks (unmarshals) the arguments, and calls the actual procedure.

- Return: The server stub packs the result into a reply message and sends it back to the client stub, which unpacks it and returns it to the caller.

Diagram:

Request: Client --> Client Stub (marshal) --> Network --> Server Stub (unmarshal) --> Procedure

Reply: Client <-- Client Stub (unmarshal) <-- Network <-- Server Stub (marshal) <-- Procedure
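The RPC steps can be sketched in a single process. This is a minimal sketch under stated assumptions: JSON stands in for the marshalling format, `network_send` is a hypothetical stand-in for the real network, and the procedure table with its `add` entry is invented for illustration.

```python
import json
from typing import Any, Callable, Dict

# Server side: procedures that may be called remotely (hypothetical).
PROCEDURES: Dict[str, Callable[..., Any]] = {"add": lambda a, b: a + b}


def server_stub(request: bytes) -> bytes:
    """Unmarshal the request, run the procedure, marshal the result."""
    call = json.loads(request.decode("utf-8"))
    result = PROCEDURES[call["proc"]](*call["args"])
    return json.dumps({"result": result}).encode("utf-8")


def network_send(request: bytes) -> bytes:
    """Stand-in for the network: hands the bytes to the server stub."""
    return server_stub(request)


def client_stub(proc: str, *args: Any) -> Any:
    """Marshal the call, send it, and unmarshal the reply."""
    request = json.dumps({"proc": proc, "args": list(args)}).encode("utf-8")
    reply = network_send(request)
    return json.loads(reply.decode("utf-8"))["result"]


def add(a: int, b: int) -> int:
    """Looks like a local function to the caller."""
    return client_stub("add", a, b)
```

The caller writes `add(2, 3)` as if the function were local. Only the stubs know that the arguments travel as bytes and that the work happens on the server side.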

Message-Oriented Communication:

Message-oriented communication uses messages as the primary communication medium. Messages are sent asynchronously or synchronously between processes or components in a distributed system.

Example:

In a message queue system (e.g., RabbitMQ), messages are sent to a queue and can be retrieved by consumers. This decouples the sender and receiver, allowing asynchronous communication.
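The decoupling can be sketched with Python's standard library. This is not RabbitMQ: an in-process `queue.Queue` is used as a stand-in for the broker, and the function name `run_demo` and the stop marker are illustrative assumptions.

```python
import queue
import threading
from typing import List


def run_demo(messages: List[str]) -> List[str]:
    """Send messages through a queue to a consumer thread."""
    q: queue.Queue = queue.Queue()
    received: List[str] = []
    stop = object()  # marker telling the consumer to finish

    def consumer() -> None:
        while True:
            item = q.get()  # blocks until a message is available
            if item is stop:
                break
            received.append(item)

    worker = threading.Thread(target=consumer)
    worker.start()
    for message in messages:
        q.put(message)  # the sender does not wait for the consumer
    q.put(stop)
    worker.join()
    return received
```

The sender only talks to the queue and never calls the consumer directly, so either side can be slow or temporarily unavailable without blocking the other.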